Doctoral Dissertation: "Charting chronicity: A Thematic Analysis of Clinical Pain Assessment Tools and Patient Documentation Design"

"Technical and Professional Communication Teachers are Good at AI Detection, But Not Good Enough: An Empirical Analysis Using Student-Written and AI-Generated STEM Reports."

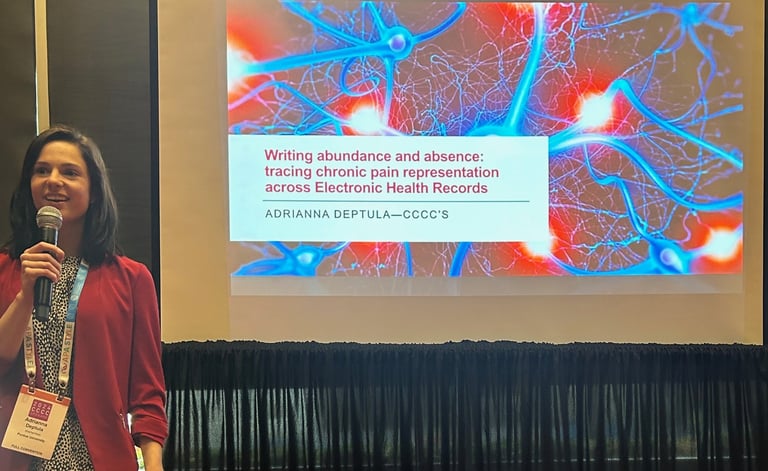

Scholars in the Rhetoric of Health and Medicine (RHM) and Disability Studies have long focused on medical discourse and how it shapes perceptions of chronic disease. According to the Centers for Disease Control and Prevention (CDC), pain is the leading cause of disability and of people seeking medical care today. Widespread disparities exist in chronic pain assessment across race, gender, class, and sexuality, especially for female-identifying patients, whose discomfort is often downplayed or dismissed. My dissertation investigates how clinical patient documentation rhetorically frames chronicity and the body in pain. Taking a rhetorical perspective, my study ultimately reveals how the design of assessment documentation contributes to these disparities.

While pain has been studied ideologically across clinical practice through multiple ontologies and stasis theory (Graham & Herndl, 2011, 2018), very little rhetorical work examines the pain assessment process itself. As a rhetorical scholar, I am interested in the written, technical design decisions behind the patient intake forms and pain scales that drive the assessment process. My research homes in on how routine documentation practices shape the assessment decisions that are made in the first place. I conduct a thematic analysis that identifies the major narratives across these technical artifacts, paying close attention to how their content and design map onto the rhetorical concepts of kairos (timeliness), chronos (quantitative time), and hexis (one’s bodily state). Conducting a thematic analysis affords new humanistic insights into how pain, Western notions of health and wellness, and disability are baked into seemingly objective forms of medical documentation.

My major findings reveal that U.S. pain and spine documentation still largely values kairotic time over the “flux,” or physical uncertainty, that comes with living with chronic pain, disability, or other long-term medical conditions. I argue that while these clinical tools are efficient from a biomedical perspective, they frame pain as an acute yet predictable problem in need of remedy. In doing so, these technical genres possess the agential power to perpetuate provider biases, reinforce stigma, and promote ableist conceptions of the body. Consequently, I propose that pain assessment documentation be enhanced through a Patient Experience Design (PXD) framework to better capture the embodied precarity and “flux” experienced by patients with chronic conditions. PXD seeks to enhance the usability of technical tools and documentation from a human-centered, patient perspective (Melonçon, 2017). You can read my dissertation here.

My research in the Rhetoric of Health and Medicine (RHM) naturally lends itself to broader scholarly conversations about access and equity. One’s health literacy and access to care often dictate one’s quality of life. This is especially true in rural areas, where shortages of healthcare facilities and medical professionals contribute to chronic health conditions and gaps in ongoing care. My current collaborative project addresses the communication challenges faced by rural healthcare clinics in the North Central United States, particularly in Indiana, where geographic isolation, financial constraints, and limited resources lead to healthcare disparities.

For Phase 1 of this project, my research team (Drs. Richard Johnson-Sheehan, Thomas Rickert, and Paul Hunter) and I have used snowball sampling to recruit, survey, and interview a range of healthcare practitioners working with rural populations in order to identify their daily communication needs. These practitioners often struggle with inefficient communication networks, generic patient education materials, and a lack of specialized resources. Our aim is to develop rhetorically driven strategies through which generative artificial intelligence (GenAI) can increase the bandwidth of medical staff and improve communication between providers and patients. The project involves three phases: assessing current communication practices, developing clinical GenAI methods to strengthen these practices, and educating healthcare professionals on critically implementing AI. Phase 2 will involve developing a locally hosted large language model that tailors patient education materials to the contextual needs of rural patients.

In October 2024, we presented our initial findings at the Council for Programs in Technical and Scientific Communication (CPTSC) conference. One publication, "Navigating methodological mutability: Researching rural healthcare written communication in the age of generative artificial intelligence," is under final review at the Journal of Written Communication.

One of the most rewarding aspects of this project has been onboarding and mentoring five undergraduate researchers from Purdue’s John Martinson Honors College who contribute to this ongoing work.

"Enhancing Rural Healthcare by Incorporating Generative AI and Machine Learning: Building Stronger Communication Networks"

Challenges persist both in integrating large language models into writing education and in distinguishing between AI-generated and student-produced texts. My collaborators (Dr. Mason Pellegrini, Dr. Paul Thompson Hunter, and David Rowe) and I seek to answer the following research questions:

1. Can writing instructors at U.S. colleges accurately differentiate between synthetic (AI-generated) and authentic (student-written) STEM reports without contextual cues?
2. What strategies, attested to by writing instructors, serve to accurately differentiate between synthetic and authentic texts?
3. What strategies, attested to by writing instructors, are found to be ineffective for such differentiation?
4. Are there verifiable relationships between U.S. college writing instructors' teaching experience, education level, AI familiarity, or other demographic items and their success in differentiating between authentic and synthetic texts?

Our research is based on the recently articulated concept of Rhetorical Authenticity, an AI-informed theory that synthesizes Erich Fromm’s true/pseudo self and Aristotelian ethos (Deptula et al., 2024). We recently received an ACM SIGDOC Career Advancement Research Grant for Phase 1 of this project. This funding supported an examination of expert audiences’ perceptions of GenAI-produced STEM reports via 400 “trials” in which participants attempted to differentiate between student-written and AI-generated text excerpts. The student-written excerpts were drawn from the Michigan Corpus of Upper-Level Student Papers (MICUSP) and paired with parallel excerpts generated by DeepSeek-R1. Instructors completed a series of survey questions, rating on a Likert scale their confidence that each excerpt was written by a student or generated by AI. Participants also provided brief open-ended responses explaining how they reached their conclusions.

Our study aims to provide both quantitative and qualitative insights into instructional expertise, best assessment practices, and teaching authenticity in technical and professional communication contexts. This October, we will present our quantitative findings at the conference of the ACM Special Interest Group on the Design of Communication (SIGDOC).